The Evolving Regulatory Landscape for AI: A Comparison of the EU AI Act, U.S. Framework, and China’s Approach

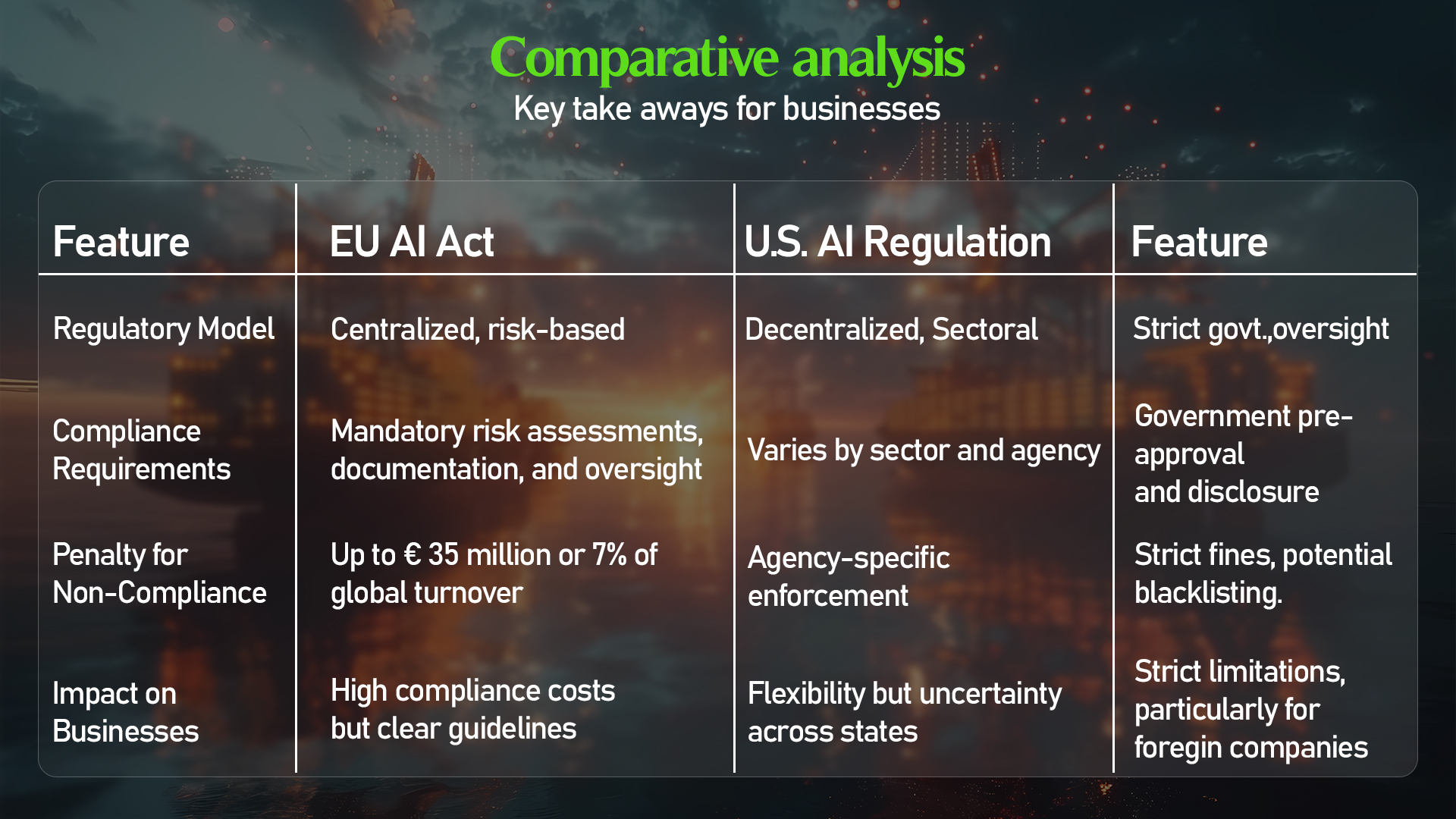

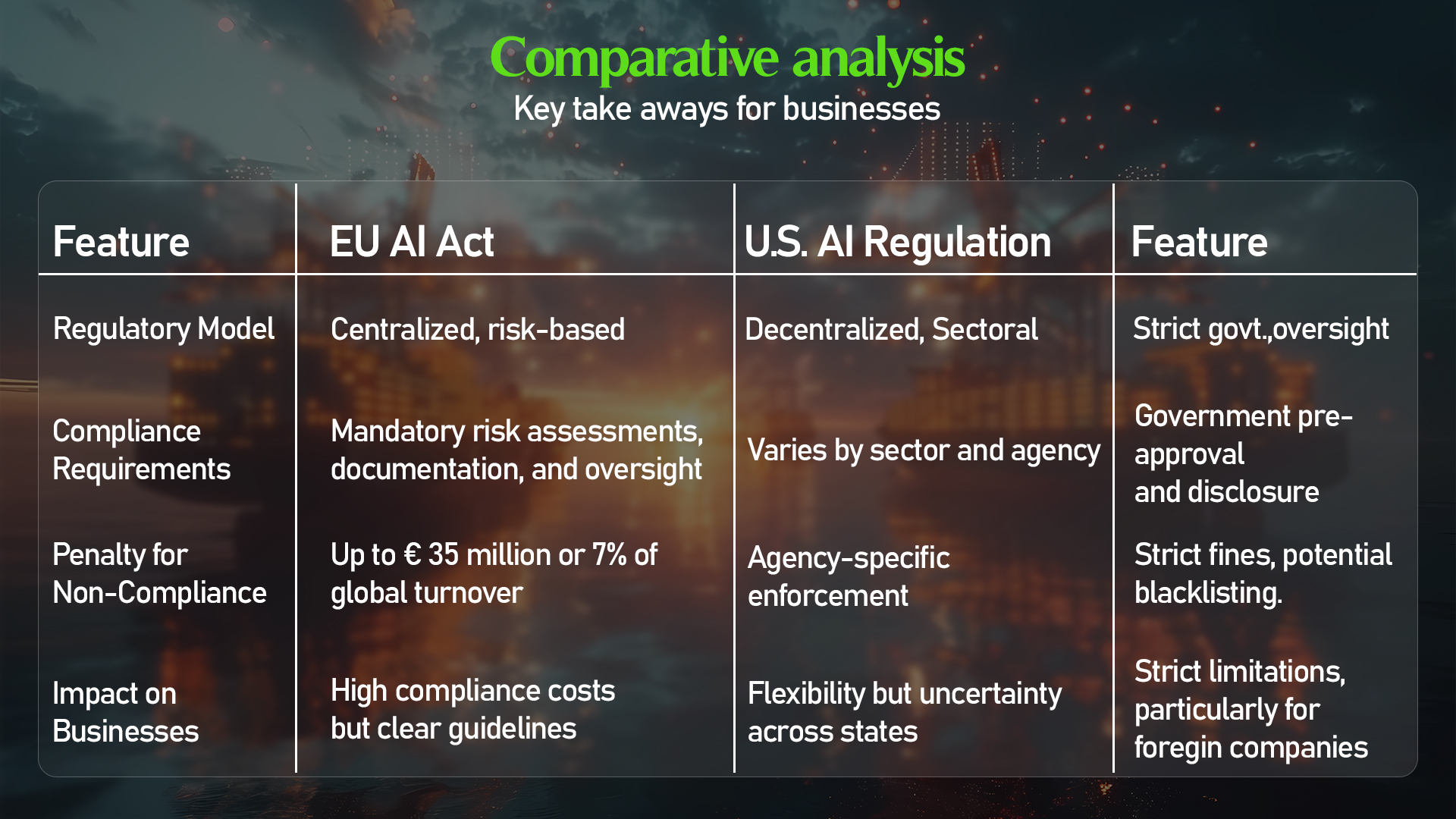

Artificial intelligence (AI) has rapidly emerged as a transformative force across industries, prompting demand for regulatory frameworks that ensure its ethical and responsible deployment. Governments have approached AI regulation differently. The European Union (EU) has pioneered comprehensive legislation with the AI Act, the United States has taken a decentralized, sector-specific approach, and China has implemented a stringent, state-controlled framework. This article compares these three regulatory regimes, covering their key principles, enforcement mechanisms, and implications for businesses.

1. The EU AI Act: A Risk-Based Framework

The European Union’s AI Act is the most comprehensive attempt at AI regulation to date. First proposed by the European Commission in April 2021 and adopted in 2024, it is often compared to the General Data Protection Regulation (GDPR) for its potential global influence. The AI Act sorts AI systems into four risk levels:

- Unacceptable Risk: AI applications that manipulate individuals, social scoring systems, and certain biometric surveillance tools are outright banned.

- High-Risk AI: Systems used in critical sectors such as healthcare, employment, law enforcement, and migration are subject to stringent compliance requirements, including risk management, transparency, and human oversight.

- Limited Risk: AI systems like chatbots must ensure transparency by informing users they are interacting with AI.

- Minimal Risk: Most AI applications, such as video games or spam filters, fall into this category and face no significant regulatory obligations.
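To make the tiering logic concrete, here is a minimal Python sketch of how an organization might triage its own AI use cases against the four tiers. The use-case labels, the `RiskTier`, `classify`, and `obligations_for` names, and the mapping itself are hypothetical simplifications drawn only from the examples above; actual classification under the Act depends on detailed legal criteria.

```python
from enum import Enum
from typing import List, Optional


class RiskTier(Enum):
    """The four risk levels described in the EU AI Act."""
    UNACCEPTABLE = "unacceptable"  # banned outright
    HIGH = "high"                  # stringent compliance requirements
    LIMITED = "limited"            # transparency obligations
    MINIMAL = "minimal"            # no significant obligations


# Hypothetical, simplified mapping of internal use-case labels to tiers.
# Real classification requires legal analysis of the Act's criteria.
EXAMPLE_TIERS = {
    "social_scoring": RiskTier.UNACCEPTABLE,
    "manipulative_ai": RiskTier.UNACCEPTABLE,
    "healthcare": RiskTier.HIGH,
    "employment": RiskTier.HIGH,
    "law_enforcement": RiskTier.HIGH,
    "migration": RiskTier.HIGH,
    "chatbot": RiskTier.LIMITED,
    "video_game": RiskTier.MINIMAL,
    "spam_filter": RiskTier.MINIMAL,
}

# Obligations per tier, paraphrasing the summary above.
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: ["do not deploy (prohibited)"],
    RiskTier.HIGH: ["risk management", "transparency", "human oversight"],
    RiskTier.LIMITED: ["inform users they are interacting with AI"],
    RiskTier.MINIMAL: [],
}


def classify(use_case: str) -> Optional[RiskTier]:
    """Return the tier for a known label, or None if the label is unmapped."""
    return EXAMPLE_TIERS.get(use_case)


def obligations_for(use_case: str) -> List[str]:
    """List obligations for a use case; unmapped labels go to legal review."""
    tier = classify(use_case)
    if tier is None:
        # Fail safe: never assume an unknown system is minimal risk.
        return ["escalate for legal review"]
    return list(OBLIGATIONS[tier])  # copy so callers can't mutate the table


if __name__ == "__main__":
    for label in ("chatbot", "employment", "new_feature"):
        print(label, "->", obligations_for(label))
```

Calling `obligations_for("chatbot")` returns `['inform users they are interacting with AI']`. An unrecognized label is escalated for legal review instead of defaulting to minimal risk, which is the safer failure mode for a compliance triage.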

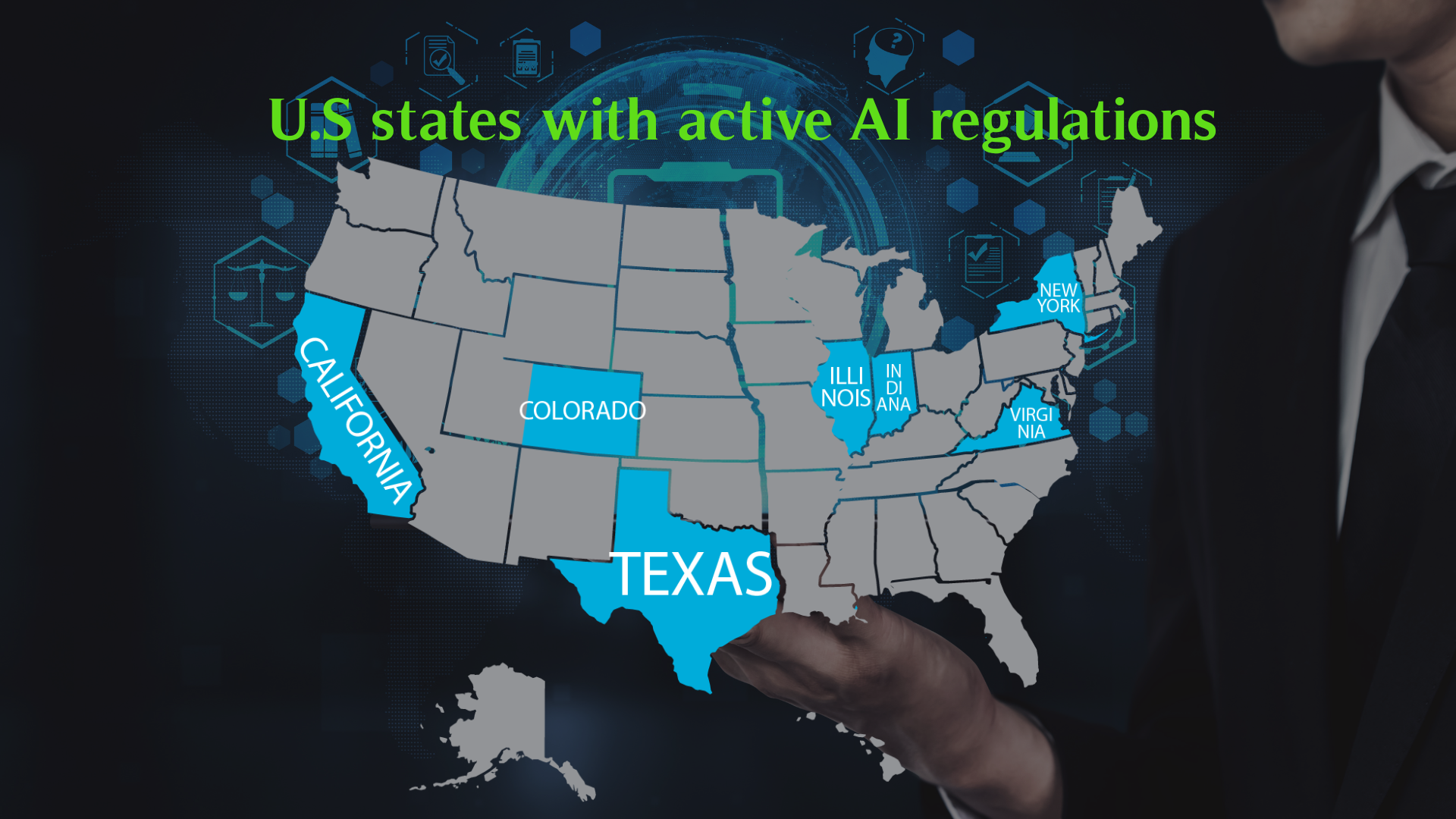

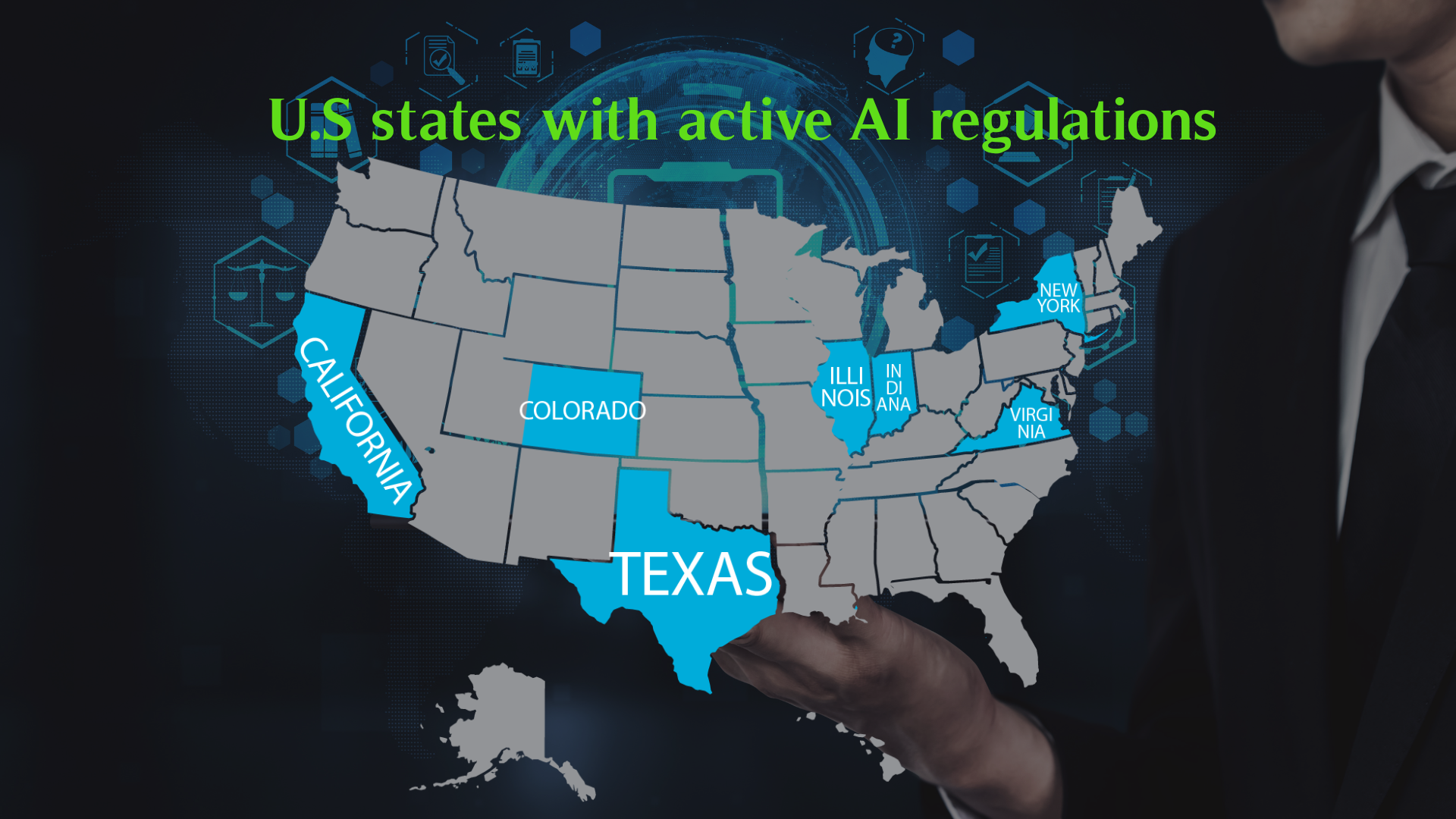

2. The U.S. Approach: Sector-Specific and Decentralized Regulation

Unlike the EU, the United States has not enacted a comprehensive federal AI law. It relies instead on existing sector-specific laws, agency guidance, and executive orders. Notable developments include:

- Blueprint for an AI Bill of Rights (2022): A non-binding White House framework outlining principles for safe and fair AI deployment.

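- Executive Order on AI (2023): Issued by President Biden in October 2023 (Executive Order 14110), it required developers of the most powerful AI models to share safety test results with the federal government and directed agencies to develop safety, security, and civil rights standards. It was revoked in January 2025.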

- Sectoral Oversight: Agencies such as the FDA, FTC, and SEC regulate AI applications within healthcare, consumer protection, and financial markets, respectively.

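- State and Local Initiatives: California and New York City have introduced AI governance rules, particularly in hiring, privacy, and data protection. New York City’s Local Law 144, for example, requires bias audits of automated employment decision tools.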

3. China: Strict Control and State Oversight

China has implemented one of the most stringent AI regulatory frameworks, emphasizing national security, data sovereignty, and social stability. Key regulations include:

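- Algorithmic Recommendation Provisions (2022): Require providers to register their algorithms with regulators, explain the basic principles behind their recommendation systems, and let users opt out of personalized recommendations.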

- Generative AI Measures (2023): Require AI-generated content to adhere to core socialist values and not endanger national security.

- Facial Recognition Rules: Restrict the collection and use of facial and other biometric data, which China’s Personal Information Protection Law treats as sensitive personal information.

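China’s AI governance is highly centralized, with regulatory power concentrated in agencies such as the Cyberspace Administration of China (CAC). Providers of public-facing generative AI and recommendation services must file their algorithms with the CAC and, where a service can shape public opinion, pass a security assessment before deployment.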

Guidelines for Businesses: Ensuring Compliance

- Assess AI Systems: Identify AI use cases and determine applicable regulations.

- Implement AI Governance: Establish policies for risk management, transparency, and oversight.

- Ensure Transparency: Clearly disclose AI usage in customer and employee interactions.

- Protect Data and Security: Align data practices with the GDPR (EU), applicable U.S. federal and state privacy laws, and China’s data protection regulations, depending on where you operate.

- Stay Updated: Monitor regulatory changes and adjust AI strategies accordingly.
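The five steps above can be turned into a simple internal inventory. The Python sketch below is a hypothetical illustration: the jurisdiction codes, the checklist strings, and the `AISystem` and `compliance_checklist` names are assumptions that paraphrase this article, not part of any regulation or official compliance tool.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical jurisdiction-specific checks, paraphrasing this article.
JURISDICTION_CHECKS = {
    "EU": ["classify the system under the AI Act risk tiers",
           "align data handling with the GDPR"],
    "US": ["identify the sector regulator (e.g. FDA, FTC, SEC)",
           "review state and local AI rules"],
    "CN": ["check CAC algorithm filing and approval requirements",
           "align data handling with China's data regulations"],
}

# Checks that apply regardless of market (governance, transparency, updates).
COMMON_CHECKS = [
    "document risk management and oversight policies",
    "disclose AI use to customers and employees",
    "schedule periodic regulatory review",
]


@dataclass
class AISystem:
    """One entry in a hypothetical internal AI inventory."""
    name: str
    use_case: str  # kept as metadata; not used by the checklist below
    markets: List[str] = field(default_factory=list)


def compliance_checklist(system: AISystem) -> List[str]:
    """Combine common checks with checks for each market the system serves."""
    items = list(COMMON_CHECKS)
    for market in system.markets:
        # Unknown markets are flagged for research instead of being skipped.
        items.extend(JURISDICTION_CHECKS.get(market, [f"research AI rules for {market}"]))
    return items


if __name__ == "__main__":
    screener = AISystem("resume-screener", "employment", ["EU", "US"])
    for item in compliance_checklist(screener):
        print("-", item)
```

For the resume screener in the example, which serves the EU and U.S., the function returns the three common checks plus two checks per market. An unknown market code produces a research item rather than an empty result, so gaps in coverage stay visible.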

Conclusion: Navigating AI Regulations in a Global Market

The regulatory landscape for AI is rapidly evolving, with the EU setting a high bar for compliance, the U.S. adopting a flexible, sector-driven approach, and China implementing stringent oversight. Businesses must adapt their AI strategies based on where they operate, ensuring they meet compliance standards while remaining competitive.

For global enterprises, a practical approach is to build AI governance frameworks around the strictest applicable standards, which reduces rework as rules tighten. Whether navigating the EU’s stringent requirements, the U.S.’s sectoral oversight, or China’s controlled framework, AI developers and deployers must stay proactive in ensuring ethical, transparent, and compliant AI use.

As AI continues to shape the future, staying ahead of regulatory trends will be critical for success.